Mastering Massive Files: Tips, Tricks, and Tools for Data Engineers

In the world of data engineering, handling huge files is a common challenge. These massive datasets can push the limits of your hardware and software, making even simple tasks like viewing or editing files a daunting prospect. In this article, we’ll explore some essential tips, tricks, and tools to help you efficiently manage and process huge files in your data engineering projects.

1. Viewing Large Files Without Freezing Your System

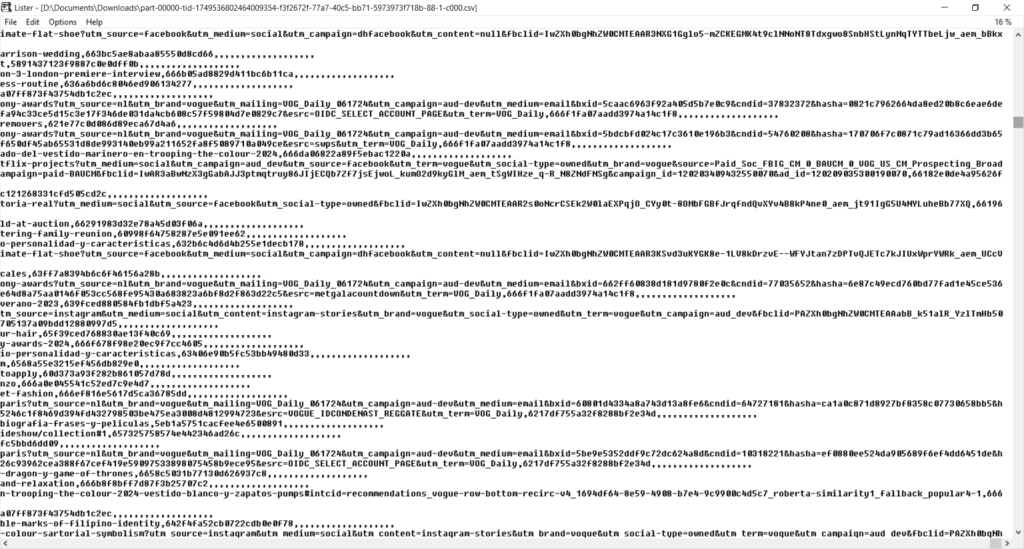

Windows: Lister

- Tool: Lister (https://www.ghisler.com/lister/ or mirror)

- Why it’s useful: Allows you to easily view large files without loading the entire file into memory. Imagine a simple notepad read-only app, made for huge files.

- How to use:

- Download and install Lister

- Right-click on the large file and select “Lister” to open

- Navigate through the file using Lister’s efficient interface

Linux: Command-line Tools

- Tools:

less,head,tail - Why they’re useful: These tools allow you to view parts of a file without loading it entirely

- How to use:

less filename: View file contents page by pagehead -n 100 filename: View the first 100 linestail -n 100 filename: View the last 100 lines

2. Editing Large Files

Linux: Text Editors for Large Files

- Tools:

vim,emacs - Why they’re useful: These editors can handle very large files efficiently

- How to use:

vim largefile.txt: Open the file in Vimemacs -Q --eval '(find-file-literally "largefile.txt")': Open in Emacs without loading the entire file

Linux: Bash scripts

- Tools:

sed,awk - Why they’re useful: These tools can handle very large files with minimal resources. TIP: LLM Chatbots like Claude & ChatGPT are great at helping you come up with the correct command.

- How to use:

Use sed to replace /” string with “” and save the result to a new file

sed 's/\/"/""/' "test.csv" > "${test%.*}_edited.csv"

Process the file in batches with sed

file="test.csv"

base_name="${file%.*}"

extension="${file##*.}"

temp_dir=$(mktemp -d)

# Split the file into smaller chunks

split -l 10000 "$file" "${temp_dir}/chunk_"

# Process each chunk

for chunk in "${temp_dir}"/chunk_*; do

sed 's/\/"/""/g' "$chunk" > "${chunk}_processed"

done

# Combine processed chunks cat "${temp_dir}"/*_processed > "${base_name}_edited.${extension}"

# Clean up temporary files

rm -rf "$temp_dir"

Process the file in batches with sed

3. Processing Files in Batches

Python: Chunking with Pandas

- Why it’s useful: Allows processing of large CSV files without loading the entire file into memory

- How to use:

import pandas as pd

chunksize = 100000 # Adjust based on your system's memory

for chunk in pd.read_csv('large_file.csv', chunksize=chunksize):

# Process each chunk

process_data(chunk)

Hadoop Ecosystem: Distributed Processing

- Tools: Hadoop, Spark

- Why they’re useful: Distributes processing across multiple nodes

- How to use:

- Set up a Hadoop or Spark cluster

- Use tools like Hive or Spark SQL to query and process large datasets

4. Compression Techniques

Gzip Compression

- Why it’s useful: Reduces file size for storage and faster processing

- How to use:

- Compress:

gzip largefile.txt - Decompress:

gunzip largefile.txt.gz

- Compress:

5. Streaming Processing

Python: Iterating Through Large Files

- Why it’s useful: Processes files line by line without loading entire file into memory

- How to use:

with open('large_file.txt', 'r') as file:

for line in file:

# Process each line

process_line(line)

6. Cloud-Based Solutions for Processing Large Files

Google Cloud’s Dataflow

Google Cloud’s Dataflow is a fully managed service for executing Apache Beam pipelines within the Google Cloud Platform ecosystem. It’s particularly useful for processing large files and datasets.

Key features:

- Serverless: No need to manage infrastructure

- Auto-scaling: Adjusts resources based on workload

- Batch and streaming: Handles both batch and real-time data processing

- Built-in templates: Offers pre-built templates for common data processing tasks

Example use case:

import apache_beam as beam

from apache_beam.options.pipeline_options import PipelineOptions

options = PipelineOptions()

with beam.Pipeline(options=options) as p:

lines = p | 'ReadLargeFile' >> beam.io.ReadFromText('gs://your-bucket/large-file.txt')

processed = lines | 'ProcessData' >> beam.Map(lambda x: x.upper())

processed | 'WriteResults' >> beam.io.WriteToText('gs://your-bucket/output')

Other options include:

- Amazon EMR (Elastic MapReduce): Amazon EMR is a cloud-based big data platform for processing vast amounts of data using open-source tools such as Apache Spark, Hive, HBase, Flink, and Presto.

- Azure HDInsight: Azure HDInsight is a fully managed, open-source analytics service for enterprises. It makes it easy to process massive amounts of data with open-source frameworks.

7. Database Solutions for Large Datasets

If you can upload the data directly to a DB (because sometimes you will need to clean them up before you can), it will make it much easier for you to work with them.

PostgreSQL

PostgreSQL is a powerful, open-source object-relational database system that can handle large datasets efficiently.

Key features for large datasets:

- Partitioning: Divide large tables into smaller, more manageable pieces

- Parallel query: Utilize multiple CPU cores for faster query execution

- JSONB data type: Efficiently store and query semi-structured data

- Foreign data wrappers: Query external data sources as if they were PostgreSQL tables

MongoDB

MongoDB is a popular NoSQL database that excels at handling large volumes of unstructured or semi-structured data.

Key features for large datasets:

- Sharding: Distribute data across multiple machines

- Indexing: Create indices to speed up queries on large collections

- Aggregation pipeline: Perform complex data transformations and analyses

- GridFS: Store and retrieve large files efficiently

8. Efficient File Formats for Big Data

When working with large datasets, choosing the right file format can significantly impact processing speed and storage efficiency.

Apache Parquet

Parquet is a columnar storage file format designed for efficient data processing and storage.

Key benefits:

- Columnar format: Allows for efficient querying of specific columns

- Compression: Reduces storage space and improves I/O

- Schema evolution: Supports adding or removing columns over time

- Predicate pushdown: Improves query performance by filtering data at the storage level

Example of reading a Parquet file with Python:

import pandas as pd

df = pd.read_parquet('large_dataset.parquet')

Apache Avro

Avro is a row-based storage format that offers schema evolution and efficient serialization.

Key benefits:

- Schema evolution: Allows for changes to the data schema over time

- Language-agnostic: Supports many programming languages

- Compact: Efficient binary serialization

- Splittable: Supports parallel processing of data files

Example of writing data to Avro format with Python:

from avro import schema, datafile, io

schema = {

'name': 'LargeDataset',

'type': 'record',

'fields': [

{'name': 'id', 'type': 'int'},

{'name': 'name', 'type': 'string'},

{'name': 'value', 'type': 'float'}

]

}

with datafile.DataFileWriter(open('large_dataset.avro', 'wb'), io.DatumWriter(), schema) as writer:

writer.append({'id': 1, 'name': 'Item 1', 'value': 10.5})

writer.append({'id': 2, 'name': 'Item 2', 'value': 20.0})

9. Inspect file snippets in case of error

When trying to import files, it’s common to get error referrencing a specific byte offset. You can use tools like sed or awk to review those exact problematic parts of the document.

For instance, check the following BigQuery import error:

Error while reading data, error message: CSV table references column position 23, but line contains only 20 columns.; byte_offset_to_start_of_line: 16955292992 column_index: 23 column_name: "count_domain_sess..." column_type: STRING File: gs://incoming-data/raw_data/data_01.csv

The following bash command will show you the last 5 lines before the problematic area, which might help identify the issue:

gsutil cat gs://incoming-data/raw_data/data_01.csv | head -c 16955292992 | tail -n 5

10. Use Cloud VMs

When working with large files don’t straing your PC and you internet connection, just spin up a cloud VM (e.g. GCP Compute engine instance) to benefit from a hardware setup tailored to your exact needs (i.e. disk space, RAM, CPU) that will be available only as long as you are working on the task.

Plus you will also benefit from much wider bandwidth when uploading/downloading files. For instance when downloading files from S3 on Compute engine using aws s3 cp I am able to reach average speeds of 150MB/sec!

Conclusion

Handling huge files is an essential skill for data engineers. By employing these tools and techniques, you can efficiently view, edit, and process files larger than 10GB without overwhelming your system resources. Remember to always consider the specific requirements of your project and the characteristics of your data when choosing the best approach.