Crack the LLM Code with These Must-Know Tips & Tricks!

Are you working with LLMs and struggling to keep up with everything that’s going on? Below is a list of my favorite, and above all actionable, tips & tricks to help you reach a production-ready app or chatbot powered by LLMs faster.

Fine-tuning

Once you have determined that fine-tuning is the right solution (i.e. you’ve optimized your prompt as far as it can take you and identified problems that the model still has), you’ll need to prepare data for training the model. You should create a diverse set of demonstration conversations that are similar to the conversations you will ask the model to respond to at inference time in production.

OpenAI requires at least 10 examples to fine-tune a model. According to OpenAI, clear improvements typically come from fine-tuning gpt-3.5-turbo on 50 to 100 training examples, but the right number varies greatly with the exact use case.

OpenAI recommends starting with 50 well-crafted demonstrations and checking whether the model shows signs of improvement after fine-tuning. In some cases that may be sufficient; even if the model is not yet production quality, clear improvements are a good sign that providing more data will continue to improve it.
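OpenAI’s chat fine-tuning expects a JSONL file in which each line is one conversation stored under a `messages` key. Below is a minimal sketch, assuming that format, that builds demonstration conversations and runs a few sanity checks before you upload anything. `make_example`, `validate`, and `to_jsonl` are hypothetical helpers, not part of any SDK.

```python
import json

# Assumed format (OpenAI chat fine-tuning): one JSON object per line, each with
# a "messages" list of system/user/assistant turns.
MIN_EXAMPLES = 10  # the minimum number of examples mentioned above
VALID_ROLES = {"system", "user", "assistant"}


def make_example(system: str, user: str, assistant: str) -> dict:
    """Build one demonstration conversation (hypothetical helper)."""
    return {
        "messages": [
            {"role": "system", "content": system},
            {"role": "user", "content": user},
            {"role": "assistant", "content": assistant},
        ]
    }


def validate(examples: list) -> list:
    """Return a list of problems; an empty list means the basic checks passed."""
    problems = []
    if len(examples) < MIN_EXAMPLES:
        problems.append(f"need at least {MIN_EXAMPLES} examples, got {len(examples)}")
    for i, ex in enumerate(examples):
        messages = ex.get("messages", [])
        for msg in messages:
            if msg.get("role") not in VALID_ROLES:
                problems.append(f"example {i}: unexpected role {msg.get('role')!r}")
            content = msg.get("content")
            if not isinstance(content, str) or not content.strip():
                problems.append(f"example {i}: empty or missing content")
        # The assistant turns are what the model is trained to produce,
        # so every example needs at least one.
        if not any(msg.get("role") == "assistant" for msg in messages):
            problems.append(f"example {i}: no assistant message")
    return problems


def to_jsonl(examples: list) -> str:
    """Serialize examples as JSON Lines: one conversation per line."""
    return "".join(json.dumps(ex, ensure_ascii=False) + "\n" for ex in examples)
```

Once `validate(examples)` returns an empty list, you could write the file with `Path("train.jsonl").write_text(to_jsonl(examples), encoding="utf-8")` and upload it as described in OpenAI’s guide. Keeping validation separate from serialization makes it easy to rerun the checks every time you add new demonstrations.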

For more guidance on fine-tuning your models, check out OpenAI’s guide.

Prompt libraries & Cookbooks

Looking for inspiration and ideas on how to prompt an LLM? Several online repositories collect prompts that can help you understand how to approach a given problem and what is possible. A few good places to start:

- LangChain’s prompt hub contains several prompts targeting technical tasks (like having an LLM communicate with APIs, or getting an LLM to review a database schema and find the relevant tables).

- Use Promptify to improve your prompts by submitting yours and getting automated suggestions on how to make them more detailed.

- OpenAI’s cookbook repo shares example code for accomplishing common tasks with the OpenAI API. Most code examples are written in Python, though the concepts can be applied in any language.

- Google’s Gemini cookbook

- Anthropic’s Claude cookbook

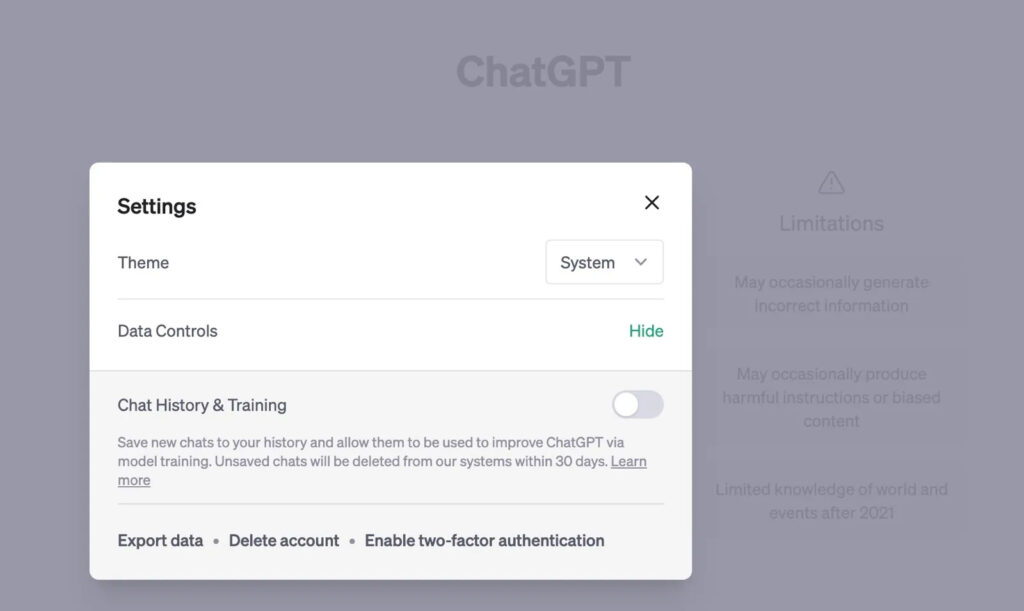

Privacy

Keep in mind that, by default, everything you send through ChatGPT’s UI is recorded by OpenAI and can be used to improve its models. You can opt out, but you will need to say goodbye to several ChatGPT features (e.g. the long history of past prompts and Advanced Data Analysis).

Using the API or the enterprise version is a different story: you retain ownership of your data, and it will not be used to train or improve models.

Keeping up with the news

With new features, tools, and techniques being released at the current pace, it’s extremely challenging to stay up to date. Below are the resources I find most useful for keeping up with the news.

- The Neuron newsletter

Great and (most importantly) original content, easy to read, and always up to date with the most important news around AI and LLMs. It covers a few technical points but usually stays at a higher level.

- LangChain’s YouTube & Discord channels

You get the chance to discuss with other people working with LLMs and see what they struggle with, and also to hear from people who have built products on top of LLMs. Most of the discussions are around LangChain, but keep in mind that LangChain is just a layer on top of LLMs.

- Latent Space

“A technical newsletter, podcast, and community by and for the rising class of AI Engineers, focusing on the business and technology of this new AI summer.” I love the content, and it will get you thinking about several aspects of AI in a more philosophical way.

Increase response accuracy

Chatbots can hallucinate, and that is a problem: hallucinations are sometimes difficult to spot and can lead to incorrect results. Below is a list of hacks to minimize hallucinations and increase accuracy:

- Divide your tasks into highly specific yes/no questions, such as “Did Q3 witness an increase in marketing expenses compared to Q2?”

- In your prompt, instruct your chatbot to clearly say “I don’t know the answer” when it doesn’t have enough context.

- Instruct your chatbot to substantiate any insights derived from data with supporting evidence.

- Keep your AI agents focused on specific tasks. Remember: focused prompts, focused tools.

- Don’t just give good examples when creating prompts. Give both good and bad examples, and explain why the bad ones are bad (Source).

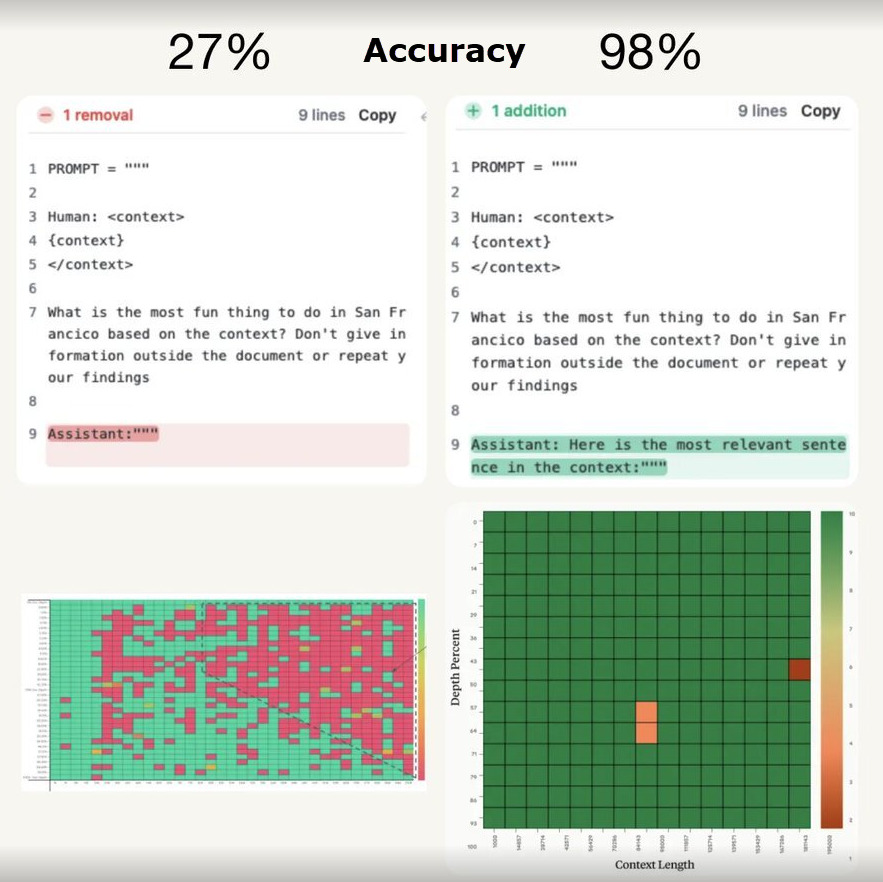

- Even small changes in the prompt (especially for models with longer context windows) can lead to huge accuracy improvements (Source).
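Several of the tips above (an explicit “I don’t know” escape hatch, a demand for supporting evidence, a single specific question, and good plus bad examples with explanations) can be combined into one prompt template. The sketch below is one possible way to do it in Python; `Example`, `build_prompt`, and the exact instruction wording are hypothetical choices, not a prescribed format.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Example:
    question: str
    answer: str
    is_good: bool
    why: str  # explanation of why this answer is good or bad


# The exact abstention phrase; reuse the same string wherever you check for it.
ABSTAIN = "I don't know the answer."


def build_prompt(context: str, question: str, examples: List[Example]) -> str:
    """Assemble a grounded question-answering prompt that applies the tips above."""
    lines = [
        "Answer the question with yes or no, using only the context below.",
        # Tip: give the model an explicit way to abstain.
        f'If the context does not contain enough information, reply exactly: "{ABSTAIN}"',
        # Tip: require supporting evidence for every insight.
        "Support every claim with a direct quote from the context.",
        "",
    ]
    # Tip: show good AND bad examples, and say why the bad ones are bad.
    for ex in examples:
        label = "GOOD" if ex.is_good else "BAD"
        lines += [f"{label} example", f"Q: {ex.question}", f"A: {ex.answer}", f"Why: {ex.why}", ""]
    # Tip: one highly specific question per prompt.
    lines += ["Context:", context, "", f"Question: {question}", "Answer:"]
    return "\n".join(lines)
```

For example, `build_prompt(quarterly_report, "Did Q3 witness an increase in marketing expenses compared to Q2?", examples)` yields a single focused prompt; to ask several things, call it once per yes/no question rather than bundling them together.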

And always verify the chatbot’s answers. LLM hallucinations are real and can severely impact the quality of the responses.
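One cheap way to back up “always verify” is to make the model return its evidence as verbatim quotes and then check that each quote really appears in your source. The sketch below assumes a hypothetical JSON reply format (`{"answer": ..., "evidence": [...]}`) that you would request in the prompt. It only catches fabricated or altered quotes, not every kind of wrong answer, so flagged cases still need a human look.

```python
import json
import re
from typing import List

ABSTAIN = "I don't know the answer."  # must match the wording used in your prompt


def _normalize(text: str) -> str:
    """Lowercase and collapse whitespace so line breaks don't cause false alarms."""
    return re.sub(r"\s+", " ", text).strip().lower()


def unsupported_quotes(evidence: List[str], source: str) -> List[str]:
    """Return the quotes that do not appear (after normalization) in the source text."""
    haystack = _normalize(source)
    return [q for q in evidence if _normalize(q) not in haystack]


def needs_review(model_output: str, source: str) -> bool:
    """Flag an answer for human review.

    Assumes the model was told to reply with JSON shaped like
    {"answer": "...", "evidence": ["quote", ...]} (a hypothetical format).
    """
    try:
        data = json.loads(model_output)
    except json.JSONDecodeError:
        return True  # malformed output always goes to review
    if not isinstance(data, dict):
        return True
    evidence = data.get("evidence") or []
    if not evidence:
        # Missing evidence is only acceptable when the model explicitly abstained.
        return str(data.get("answer", "")).strip() != ABSTAIN
    return bool(unsupported_quotes(evidence, source))
```

Exact substring matching is deliberately strict: a paraphrased “quote” gets flagged, which is usually what you want when the goal is to catch made-up evidence.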

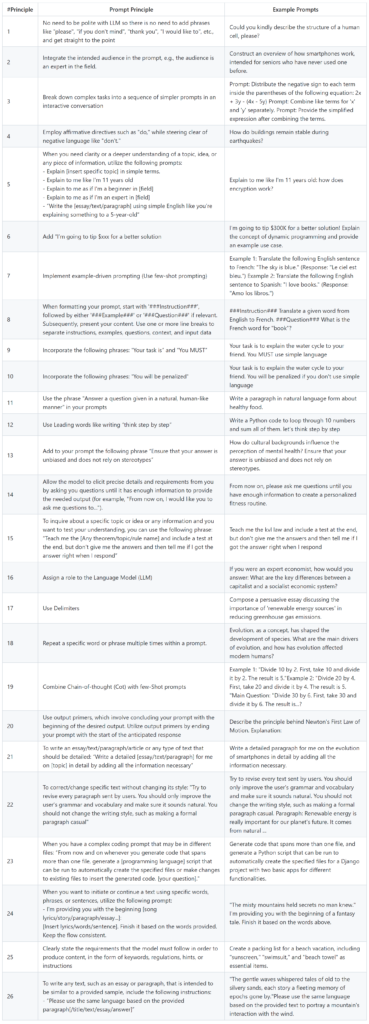

Also keep in mind the list of prompt principles from VILA-Lab’s ATLAS project, which reportedly improve the quality of LLM responses by 50%!

Foster LLM adoption in your company

According to research, most employees still don’t use LLMs or aren’t sufficiently familiar with them. Looking for ways to change that? Try one (or more) of the following tricks to bring them on board faster:

- AI Prompt Hub: Establish a central hub for employees to share and discuss prompts used in their daily workflows. A simple Google Doc will suffice.

- AI Innovation Day: Host a hackathon dedicated to AI, encouraging cross-functional teams to brainstorm and develop AI-driven solutions for company processes.

- AI Brainstorm Depot: Launch an AI suggestion channel, either an online platform or an in-person meetup, where employees can submit their ideas for AI solutions.

- ChatGPT Plus Coverage: Offer reimbursement for ChatGPT Plus subscriptions to all employees, while ensuring robust security measures are in place.

- Trigger AI Discussions: Promote the exchange of AI resources, guides, and articles within your Slack or Teams channels, beginning with the enthusiastic support of senior leadership.

Monitor and QA apps built on top of LLMs

You can’t improve what you can’t measure! There are a few options you can use on top of your LLM-powered app to monitor its accuracy, token consumption, and returned responses. This way you can easily debug and evaluate your app’s performance.

My go-to tools for this purpose are:

- LangSmith: LangSmith is a platform for building production-grade LLM applications.

It lets you debug, test, evaluate, and monitor chains and intelligent agents built on any LLM framework and seamlessly integrates with LangChain, the go-to open-source framework for building with LLMs.

LangSmith is developed by LangChain, the company behind the open-source LangChain framework.

- Langfuse: Similar to LangSmith, Langfuse is an open-source product analytics suite for LLM apps.

- Context.ai: Context is a product analytics tool for AI chat products.

With Context, you can understand how your users interact with natural language interfaces. This helps you see where your customers are having great experiences and proactively detect potential areas of improvement. It also shows you where inappropriate conversations are taking place and provides feedback on managing them.
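Roughly speaking, tools like these wrap each LLM call and record what went in, what came out, how long it took, and how many tokens it used. The tool-agnostic sketch below illustrates that idea only; it is not the API of LangSmith, Langfuse, or Context. `traced`, `Trace`, and the `{"text": ..., "usage": {...}}` return shape (with OpenAI-style `prompt_tokens` / `completion_tokens` keys) are hypothetical. In practice you would use the SDK of whichever tool you pick.

```python
import functools
import time
from dataclasses import dataclass
from typing import Any, Dict, List, Optional


@dataclass
class Trace:
    name: str
    inputs: Dict[str, Any]
    output: Optional[str] = None
    error: Optional[str] = None
    latency_s: float = 0.0
    prompt_tokens: int = 0
    completion_tokens: int = 0


# In-memory store for illustration; a monitoring tool would send these to its backend.
TRACES: List[Trace] = []


def traced(fn):
    """Record inputs, output, latency, token usage and errors for every call to fn.

    Assumes fn returns {"text": ..., "usage": {"prompt_tokens": ..., "completion_tokens": ...}}
    (a hypothetical contract for this sketch).
    """
    @functools.wraps(fn)
    def wrapper(*args, **kwargs):
        trace = Trace(name=fn.__name__, inputs={"args": args, "kwargs": kwargs})
        start = time.perf_counter()
        try:
            result = fn(*args, **kwargs)
            trace.output = result["text"]
            usage = result.get("usage", {})
            trace.prompt_tokens = usage.get("prompt_tokens", 0)
            trace.completion_tokens = usage.get("completion_tokens", 0)
            return result
        except Exception as exc:
            trace.error = repr(exc)  # keep failures visible in the trace log
            raise
        finally:
            # Runs on success and failure alike, so every call is recorded.
            trace.latency_s = time.perf_counter() - start
            TRACES.append(trace)
    return wrapper


def total_tokens() -> int:
    """Token consumption across all recorded calls."""
    return sum(t.prompt_tokens + t.completion_tokens for t in TRACES)
```

Decorate the function that calls your LLM with `@traced`, and you can later scan `TRACES` for slow calls, errors, unexpected responses, or token spikes, which is the kind of data these tools surface in their dashboards.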

Use LLMs in BigQuery

If you are already working with BigQuery, you can process your data with Google’s LLMs using nothing but SQL. For more details, check out the chapter “Use LLMs in SQL” in my article “BigQuery: Advanced SQL query hacks”.
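As a rough illustration, BigQuery exposes this through the `ML.GENERATE_TEXT` table function, which reads a column named `prompt` from an input query. The sketch below assumes you have already created a remote model over a Google LLM (with `CREATE MODEL ... REMOTE WITH CONNECTION`); the project, dataset, model, table, and column names are hypothetical. It builds the query in Python and, optionally, runs it with the `google-cloud-bigquery` client, passing the instruction as a query parameter rather than pasting it into the SQL.

```python
import re

# Project/dataset/object paths: letters, digits, underscores, hyphens and dots only.
_PATH = re.compile(r"^[A-Za-z0-9_.-]+$")
_COLUMN = re.compile(r"^[A-Za-z_][A-Za-z0-9_]*$")


def _quote(path: str) -> str:
    """Backtick-quote a project.dataset.object path after a basic sanity check."""
    if not _PATH.match(path):
        raise ValueError(f"unexpected characters in identifier: {path!r}")
    return f"`{path}`"


def build_generate_text_sql(model: str, table: str, text_column: str,
                            temperature: float = 0.2, max_output_tokens: int = 256) -> str:
    """Build an ML.GENERATE_TEXT query; the instruction is supplied as @instruction."""
    if not _COLUMN.match(text_column):
        raise ValueError(f"unexpected column name: {text_column!r}")
    # flatten_json_output returns the generated text as a plain string column.
    return f"""SELECT {text_column}, ml_generate_text_llm_result, ml_generate_text_status
FROM ML.GENERATE_TEXT(
  MODEL {_quote(model)},
  (SELECT {text_column}, CONCAT(@instruction, {text_column}) AS prompt FROM {_quote(table)}),
  STRUCT({float(temperature)} AS temperature, {int(max_output_tokens)} AS max_output_tokens,
         TRUE AS flatten_json_output)
)"""


def run_query(sql: str, instruction: str) -> list:
    """Execute the query with the google-cloud-bigquery client (imported lazily)."""
    from google.cloud import bigquery  # requires `pip install google-cloud-bigquery`

    client = bigquery.Client()  # uses your default Google Cloud credentials and project
    job_config = bigquery.QueryJobConfig(
        query_parameters=[bigquery.ScalarQueryParameter("instruction", "STRING", instruction)]
    )
    return list(client.query(sql, job_config=job_config).result())
```

A possible usage: `sql = build_generate_text_sql("my-project.my_dataset.gemini_model", "my-project.my_dataset.reviews", "review")`, then `rows = run_query(sql, "Classify the sentiment of this review as positive or negative: ")`. You can equally paste the generated SQL into the BigQuery console, replacing `@instruction` with a string literal.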